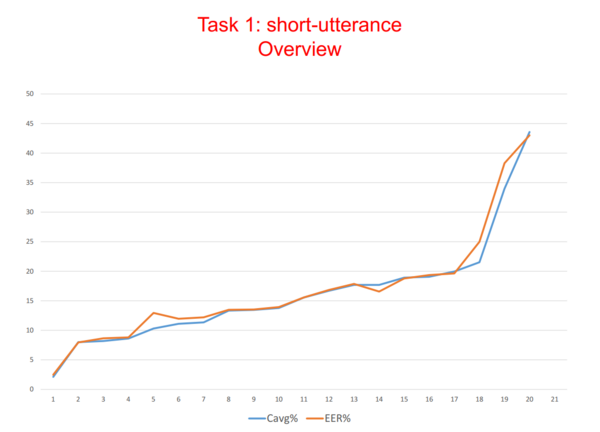

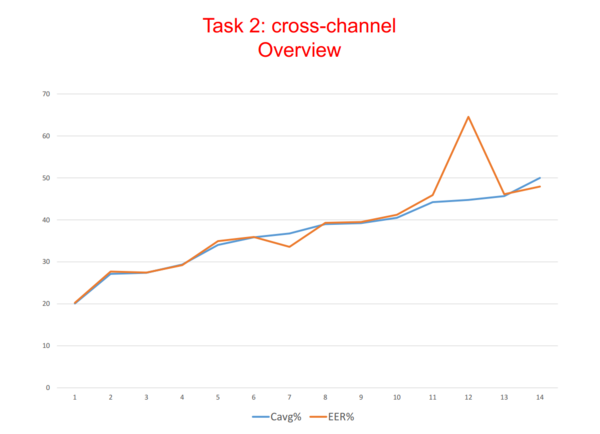

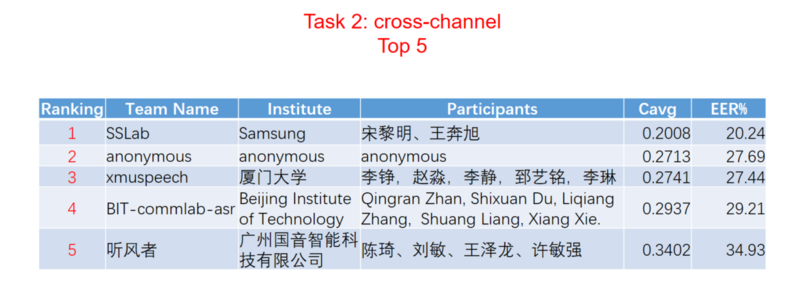

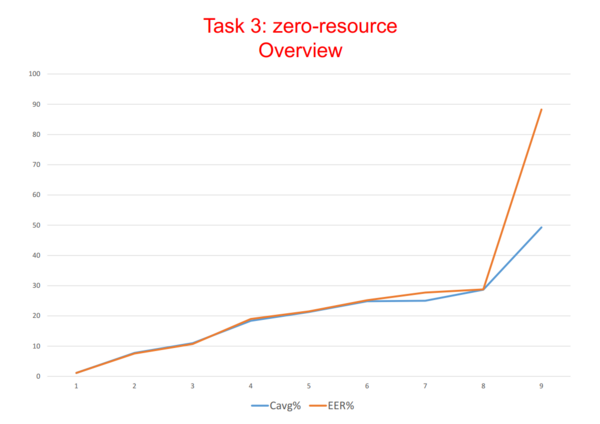

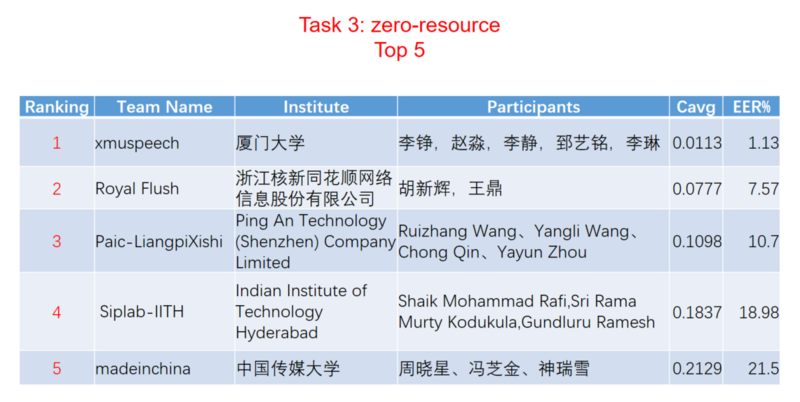


Latest revision as of 08:34, 22 November 2019 (Friday)


Oriental Language Recognition (OLR) 2019 Challenge

Oriental languages involve interesting specialties. The OLR challenge series aims at boosting language recognition technology for oriental languages. Following the success of OLR Challenge 2016, OLR Challenge 2017 and OLR Challenge 2018, the 2019 challenge follows the same theme but sets up more challenging tasks:

- Task 1: Short-utterance LID, where the test utterances are as short as 1 second.

- Task 2: Cross-channel LID, where test data is in different channels from the training set.

- Task 3: Zero-resource LID, where no resources are provided for training before inference, but several reference utterances are provided for each language.
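For local development under the Task 1 condition, one option is to cut longer utterances into 1-second pieces. The following is a minimal Python sketch under stated assumptions, not part of the official challenge tools or the Kaldi baseline:

- The waveform is a plain list of float samples.
- The sampling rate is assumed to be 16 kHz; check the data profile before relying on it.
- The function name `split_into_segments` is hypothetical.

```python
from typing import List, Sequence

# Assumed sampling rate in Hz (hypothetical; verify against the data profile).
SAMPLE_RATE = 16000


def split_into_segments(samples: Sequence[float], seg_seconds: float = 1.0,
                        sample_rate: int = SAMPLE_RATE) -> List[Sequence[float]]:
    """Cut a waveform into non-overlapping segments of seg_seconds each.

    The trailing remainder shorter than one segment is dropped, so every
    returned segment has exactly the target duration.
    """
    seg_len = int(seg_seconds * sample_rate)
    if seg_len <= 0:
        raise ValueError("segment length must be positive")
    n_full = len(samples) // seg_len
    return [samples[i * seg_len:(i + 1) * seg_len] for i in range(n_full)]


if __name__ == "__main__":
    # A fake 3.5-second utterance of silence at the assumed sampling rate.
    utterance = [0.0] * int(3.5 * SAMPLE_RATE)
    segments = split_into_segments(utterance)
    print(len(segments), len(segments[0]))  # 3 16000
```

Dropping the remainder keeps every segment at exactly 1 second. Padding it instead would mix in artificially shorter speech.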

We will publish the results in a special session of APSIPA ASC 2019.

News

- Ground truth for the test data released.

- Test data for the 3 tasks released (if you have not received the download email, please contact us).

- Challenge registration open.

Data

The challenge is based on two multilingual databases: AP16-OL7, designed for OLR Challenge 2016, and AP17-OL3, designed for OLR Challenge 2017. For AP19-OLR, a development set, AP19-OLR-dev, is also provided.

AP16-OL7 is provided by SpeechOcean (www.speechocean.com), and AP17-OL3 is provided by Tsinghua University, Northwest Minzu University and Xinjiang University, under the M2ASR project supported by NSFC.

AP16-OL7 has the following features:

- Mobile channel

- 7 languages in total

- 71 hours of speech signals in total

- Transcriptions and lexica are provided

- The data profile is here

- The license for the data is here

AP17-OL3 has the following features:

- Mobile channel

- 3 languages in total

- Tibetan provided by Prof. Guanyu Li@Northwest Minzu Univ.

- Uyghur and Kazak provided by Prof. Askar Hamdulla@Xinjiang University.

- 35 hours of speech signals in total

- Transcriptions and lexica are provided

- The data profile is here

- The license for the data is here

AP19-OLR-dev supports development for each of the 3 tasks:

- Task 1: AP19-OLR-dev does not include dedicated short-duration speech data, but the short-duration test sets from previous challenges can be used for development.

- Task 2: for the cross-channel task, AP19-OLR-dev includes a subset containing 500 utterances per target language.

- Task 3: in the zero-resource subset of AP19-OLR-dev, 3 new languages are provided with 5 utterances for enrollment and 500 for test. Note that the 3 languages in the final test are different from those in the development.
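In Task 3, each language is known only through a few enrollment utterances. One common way to score such a setup is to average the per-utterance embeddings of each language into a single model, then assign each test utterance to the language with the highest cosine similarity. This is not necessarily the official baseline, which is described in the AP19-OLR paper.

The minimal Python sketch below makes several assumptions:

- Utterance embeddings have already been extracted by some front end, for example i-vectors or x-vectors.
- All names are hypothetical.
- The toy data uses 2 enrollment vectors per language rather than 5.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def mean_vector(vectors: List[Vector]) -> List[float]:
    """Element-wise average of equally sized vectors."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def enroll(enroll_embs: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Build one model per language by averaging its enrollment embeddings."""
    return {lang: mean_vector(embs) for lang, embs in enroll_embs.items()}


def identify(test_emb: Vector, models: Dict[str, List[float]]) -> str:
    """Return the enrolled language whose model is most cosine-similar."""
    scores = {lang: cosine(test_emb, m) for lang, m in models.items()}
    return max(scores, key=scores.get)


if __name__ == "__main__":
    # Toy 3-dimensional embeddings standing in for real utterance embeddings.
    enrollment = {
        "lang_a": [[1.0, 0.1, 0.0], [0.9, 0.0, 0.1]],
        "lang_b": [[0.0, 1.0, 0.1], [0.1, 0.9, 0.0]],
        "lang_c": [[0.0, 0.1, 1.0], [0.1, 0.0, 0.9]],
    }
    models = enroll(enrollment)
    print(identify([0.05, 0.95, 0.05], models))  # lang_b
```

Because no training data exists for the new languages, nothing here is learned per language. The enrollment averages serve directly as the language models.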

Evaluation plan

Refer to the following paper:

Zhiyuan Tang, Dong Wang, Liming Song: AP19-OLR Challenge: Three Tasks and Their Baselines, submitted to APSIPA ASC 2019. pdf

Evaluation tools

- The Kaldi-based baseline scripts are here

Participation rules

- Participants from both academia and industry are welcome

- Publications based on the data provided by the challenge should cite the following papers:

Dong Wang, Lantian Li, Difei Tang, Qing Chen, AP16-OL7: a multilingual database for oriental languages and a language recognition baseline, APSIPA ASC 2016. pdf

Zhiyuan Tang, Dong Wang, Yixiang Chen, Qing Chen: AP17-OLR Challenge: Data, Plan, and Baseline, APSIPA ASC 2017. pdf

Zhiyuan Tang, Dong Wang, Qing Chen: AP18-OLR Challenge: Three Tasks and Their Baselines, submitted to APSIPA ASC 2018. pdf

Zhiyuan Tang, Dong Wang, Liming Song: AP19-OLR Challenge: Three Tasks and Their Baselines, submitted to APSIPA ASC 2019. pdf

Important dates

- July 16, AP19-OLR training/dev data release.

- Oct. 1, registration deadline.

- Oct. 20, test data release.

- Nov. 1, 24:00, Beijing time, submission deadline.

- APSIPA ASC 2019 (18 Nov), results announcement.

Registration procedure

If you intend to participate in the challenge, or if you have any questions, comments or suggestions about the challenge, please send an email to the organizers (olr19@cslt.org). Participants must provide the following information. Please also sign the Data License Agreement on behalf of an organization or company engaged in speech research/technology, and send back the scanned copy by email.

- Team Name:

- Institute:

- Participants:

- Duty person:

- Corresponding email:

- Homepage of person/organization/company:

(If a homepage is not available, any of your online papers in the speech field is acceptable.)

Organization Committee

- Zhiyuan Tang, Tsinghua University [home]

- Dong Wang, Tsinghua University [home]

- Qingyang Hong, Xiamen University

- Ming Li, Duke-Kunshan University

- Xiaolei Zhang, NWPU

- Liming Song, SpeechOcean

Ranking list

The Oriental Language Recognition (OLR) Challenge 2019, co-organized by CSLT@Tsinghua University, Xiamen University, Duke-Kunshan University, Northwestern Polytechnical University and SpeechOcean, was successfully completed. The results were published at APSIPA ASC 2019, Nov 18-21, 2019, Lanzhou, China.

Overview

A total of 45 teams registered for this challenge, and more than 20 teams submitted results by the submission deadline. The submissions were ranked separately for each of the 3 language recognition tasks: the short-utterance identification task, the cross-channel identification task, and the zero-resource identification task. Only the team information of the top 5 teams is presented here.

For more details and the history of the challenge, see the slides.

Task 1

Task 2

Task 3

Top system description

- Descriptions (in Chinese) from Innovem.

- Descriptions from xmuspeech.

- Descriptions from SSLab.