Latest revision as of 11:45, 31 October 2017 (Tuesday)


Project name

Phonetic Temporal Neural (PTN) Model for Language Identification

Project members

Dong Wang, Zhiyuan Tang, Lantian Li, Ying Shi

Introduction

Deep neural models, particularly the LSTM-RNN model, have shown great potential for language identification (LID). However, phonetic information has been largely overlooked by most existing neural LID methods, although it has been used very successfully in conventional phonetic LID systems. In this project, we present a phonetic temporal neural (PTN) model for LID: an LSTM-RNN LID system that accepts phonetic features produced by a phone-discriminative DNN as input, rather than raw acoustic features. This new model is similar to traditional phonetic LID methods, but the phonetic knowledge here is much richer: it is at the frame level and carries compact information about all phones. The PTN model significantly outperforms existing acoustic neural models. It also outperforms the conventional i-vector approach on short utterances and in noisy conditions.

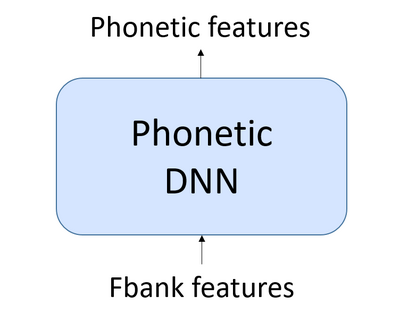

Phonetic feature

All present neural LID methods are based on acoustic features, e.g., Mel filter banks (Fbanks) or Mel frequency cepstral coefficients (MFCCs), with phonetic information largely overlooked. This may have significantly hindered the performance of neural LID. It is a long-standing and intuitive hypothesis that languages can be distinguished by their phonetic properties, either distributional or temporal. Additionally, phonetic features represent information at a higher level than acoustic features, and so are more invariant to noise and channel variation.

- Phonetic DNN: the acoustic model of an ASR system.

- Phonetic features: the output of the last hidden layer of the phonetic DNN.
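To make the two definitions above concrete, here is a minimal sketch of reading phonetic features from the last hidden layer. It is an illustration, not the project's implementation: the weights are random rather than trained on ASR data, the layer sizes (`ACOUSTIC_DIM`, `HIDDEN_DIM`) and helper names are assumptions, and a plain feed-forward stack stands in for the real acoustic model.

```python
import random

def make_layer(n_in, n_out, rng):
    """Random weights and zero biases for one dense layer (illustrative only)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def relu(z):
    return max(0.0, z)

def dense(x, layer):
    """Apply one dense layer with a ReLU non-linearity to vector x."""
    weights, biases = layer
    return [relu(sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def phonetic_features(frames, hidden_layers):
    """Run each acoustic frame through the hidden layers only and return the
    last hidden layer's activations. The phone-classification output layer
    of the ASR acoustic model is deliberately not applied."""
    features = []
    for frame in frames:
        h = frame
        for layer in hidden_layers:
            h = dense(h, layer)
        features.append(h)
    return features

rng = random.Random(0)
ACOUSTIC_DIM, HIDDEN_DIM = 40, 16   # assumed sizes, e.g. 40-dim Fbank frames
hidden_layers = [make_layer(ACOUSTIC_DIM, HIDDEN_DIM, rng),
                 make_layer(HIDDEN_DIM, HIDDEN_DIM, rng)]
frames = [[rng.gauss(0.0, 1.0) for _ in range(ACOUSTIC_DIM)] for _ in range(5)]
feats = phonetic_features(frames, hidden_layers)
print(len(feats), len(feats[0]))   # one phonetic feature vector per frame
```

The point of the sketch is only that the LID system consumes one hidden-layer vector per frame instead of phone posteriors or decoded phone sequences.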

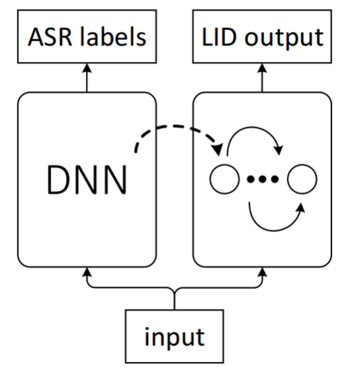

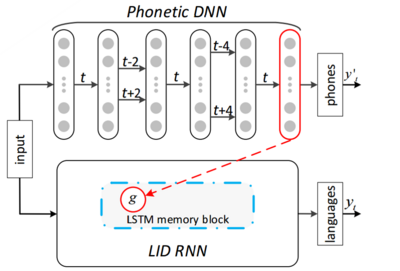

Phone-aware model

The phone-aware LID model consists of a phonetic DNN (left), which produces phonetic features, and an LID RNN (right), which makes LID decisions. The LID RNN receives both phonetic and acoustic features as input.

The phonetic feature is read from the last hidden layer of the phonetic DNN, which is a TDNN. It is then propagated to the g function of the phonetically aware RNN LID system, while the acoustic feature serves as the LID system's input.
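The wiring described above can be sketched as a single LSTM step. This is a hedged illustration under stated assumptions: it takes the "g function" to be the cell-input (candidate) non-linearity of a standard LSTM, omits biases and any projection layer, and uses random weights. The parameter names (`Wi`, `Ui`, `P`, and so on) and dimensions are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rand_matrix(n_out, n_in, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def add(a, b):
    return [u + v for u, v in zip(a, b)]

def phone_aware_step(x, p, h_prev, c_prev, params):
    """One LSTM step. The input, forget and output gates see the acoustic
    frame x and the previous output h_prev; only the candidate g additionally
    receives the phonetic feature p."""
    pre = {name: add(matvec(params["W" + name], x),
                     matvec(params["U" + name], h_prev))
           for name in "ifog"}
    pre["g"] = add(pre["g"], matvec(params["P"], p))   # phonetic injection into g
    i = [sigmoid(z) for z in pre["i"]]
    f = [sigmoid(z) for z in pre["f"]]
    o = [sigmoid(z) for z in pre["o"]]
    g = [math.tanh(z) for z in pre["g"]]
    c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c_prev, i, g)]
    h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
    return h, c

rng = random.Random(0)
ACOUSTIC_DIM, PHONETIC_DIM, CELL_DIM = 40, 16, 8   # assumed sizes
params = {}
for name in "ifog":
    params["W" + name] = rand_matrix(CELL_DIM, ACOUSTIC_DIM, rng)
    params["U" + name] = rand_matrix(CELL_DIM, CELL_DIM, rng)
params["P"] = rand_matrix(CELL_DIM, PHONETIC_DIM, rng)

h, c = [0.0] * CELL_DIM, [0.0] * CELL_DIM
for _ in range(5):   # five frames of stand-in acoustic and phonetic features
    x = [rng.gauss(0.0, 1.0) for _ in range(ACOUSTIC_DIM)]
    p = [rng.gauss(0.0, 1.0) for _ in range(PHONETIC_DIM)]
    h, c = phone_aware_step(x, p, h, c, params)
print(len(h))
```

In this sketch the gates are unaffected by the phonetic feature, which only shifts the content written into the cell.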

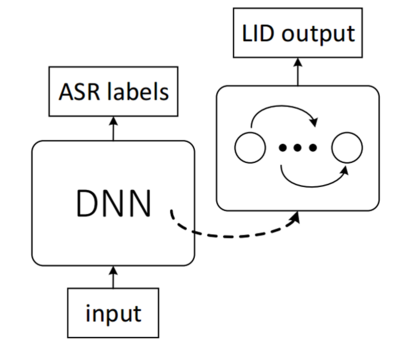

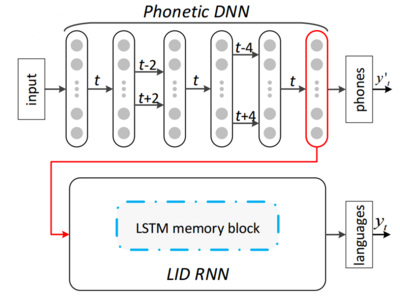

Phonetic Temporal Neural (PTN) model

The PTN model consists of a phonetic DNN (left), which produces phonetic features, and an LID RNN (right), which makes LID decisions. The LID RNN receives only the phonetic feature as input.

The phonetic feature is read from the last hidden layer of the phonetic DNN, which is a TDNN. It is then propagated to the g function of the RNN LID system and is the only input to the PTN LID system.
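As a rough sketch of the PTN arrangement, the code below runs an LSTM over phonetic features alone and turns the frame-level outputs into one decision per utterance. Several details are assumptions rather than facts from this page: the phonetic feature is fed as the ordinary LSTM input (so it reaches the gates as well as g), frame-level softmax posteriors are averaged to score the utterance, biases are omitted, and the weights are random. The language list is the AP16-OLR set named on this page; all function and parameter names are hypothetical.

```python
import math
import random

LANGUAGES = ["Mandarin", "Cantonese", "Indonesian", "Japanese",
             "Russian", "Korean", "Vietnamese"]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rand_matrix(n_out, n_in, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def softmax(z):
    top = max(z)
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def lstm_step(p, h_prev, c_prev, params):
    """Standard LSTM step whose only external input is the phonetic feature p."""
    pre = {n: [a + b for a, b in zip(matvec(params["W" + n], p),
                                     matvec(params["U" + n], h_prev))]
           for n in "ifog"}
    i = [sigmoid(z) for z in pre["i"]]
    f = [sigmoid(z) for z in pre["f"]]
    o = [sigmoid(z) for z in pre["o"]]
    g = [math.tanh(z) for z in pre["g"]]
    c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c_prev, i, g)]
    h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
    return h, c

def ptn_identify(phonetic_feats, params, cell_dim):
    """Average frame-level language posteriors over the utterance (assumed
    scoring rule) and return the best language with the averaged scores."""
    h, c = [0.0] * cell_dim, [0.0] * cell_dim
    scores = [0.0] * len(LANGUAGES)
    for p in phonetic_feats:
        h, c = lstm_step(p, h, c, params)
        posterior = softmax(matvec(params["V"], h))
        scores = [s + q / len(phonetic_feats) for s, q in zip(scores, posterior)]
    best = max(range(len(LANGUAGES)), key=scores.__getitem__)
    return LANGUAGES[best], scores

rng = random.Random(0)
PHONETIC_DIM, CELL_DIM = 16, 8   # assumed sizes
params = {"V": rand_matrix(len(LANGUAGES), CELL_DIM, rng)}
for n in "ifog":
    params["W" + n] = rand_matrix(CELL_DIM, PHONETIC_DIM, rng)
    params["U" + n] = rand_matrix(CELL_DIM, CELL_DIM, rng)

utterance = [[rng.gauss(0.0, 1.0) for _ in range(PHONETIC_DIM)] for _ in range(20)]
language, scores = ptn_identify(utterance, params, CELL_DIM)
print(language)   # arbitrary here, since the weights are untrained
```

Compared with the phone-aware model, no acoustic frame enters the LID RNN at all; the acoustic signal is seen only by the phonetic DNN.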

Performance

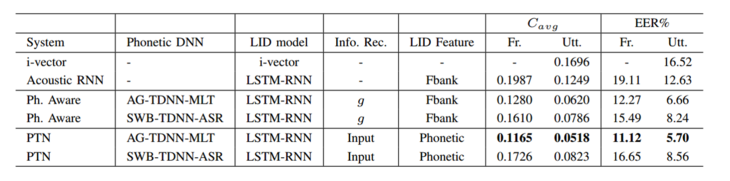

On Babel database

Babel contains seven languages: Assamese, Bengali, Cantonese, Georgian, Pashto, Tagalog and Turkish.

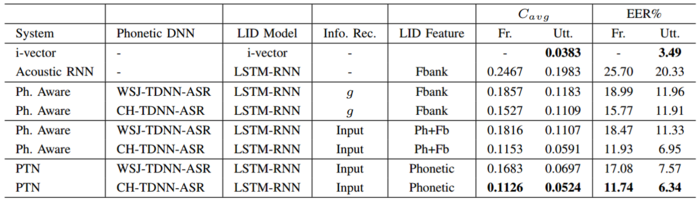

On AP16-OLR database

AP16-OLR (http://cslt.riit.tsinghua.edu.cn/mediawiki/index.php/OLR_Challenge_2016) contains seven languages: Mandarin, Cantonese, Indonesian, Japanese, Russian, Korean and Vietnamese.

Research directions

- Multilingual ASR with language information.

- Joint training with a multi-task recurrent model for ASR and LID.

- Multi-scale RNN LID.

References

[1] Zhiyuan Tang, Dong Wang*, Yixiang Chen, Lantian Li and Andrew Abel. Phonetic Temporal Neural Model for Language Identification. IEEE/ACM Transactions on Audio, Speech, and Language Processing. 2017.

[2] Zhiyuan Tang, Dong Wang*, Yixiang Chen, Ying Shi and Lantian Li. Phone-aware Neural Language Identification. O-COCOSDA 2017. https://arxiv.org/pdf/1705.03152.pdf

[3] Zhiyuan Tang, Lantian Li, Dong Wang* and Ravi Vipperla. Collaborative Joint Training with Multi-task Recurrent Model for Speech and Speaker Recognition. IEEE/ACM Transactions on Audio, Speech, and Language Processing. 2017. http://ieeexplore.ieee.org/document/7782371