Deep Generative Factorization For Speech Signal (ICASSP21)


Revision as of 08:23, 23 October 2020 (Friday)


Introduction

This paper presents a speech information factorization method based on a novel deep generative model that we call the factorial discriminative normalization flow (factorial DNF). Qualitative and quantitative experiments show that, compared with the other models tested (VAE, NF and DNF), factorial DNF retains the class structure of multiple information factors, and changing one factor causes little distortion on the others. This demonstrates that factorial DNF can effectively factorize the speech signal into different information factors.

Members

- Haoran Sun, Lantian Li, Yunqi Cai, Yang Zhang, Thomas Fang Zheng, Dong Wang

Publications

- Haoran Sun, Lantian Li, Yunqi Cai, Yang Zhang, Thomas Fang Zheng, Dong Wang, "Deep Generative Factorization For Speech Signal", 2020.

Source Code

xx

Factorial DNF

xxx

Experiments

Data

The TIMIT database is used in our experiments. The original 58 phones in the TIMIT transcriptions are mapped to 39 phones with the Kaldi toolkit following its TIMIT recipe, and the 38 non-silence phones are used as phone labels. To balance the number of classes between phones and speakers, we select 20 female and 20 male speakers, giving 40 speakers in total.
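A minimal sketch of the label preparation, assuming the standard TIMIT naming in which each speaker ID starts with its gender letter (e.g. FCJF0, MDAB0). The folding table is only an illustrative subset of the usual 39-phone mapping, not the full Kaldi map, and the speaker-selection rule (first 20 of each gender in sorted order) is a hypothetical choice: the page does not say how the 40 speakers were picked.

```python
# Illustrative subset of the standard TIMIT 39-phone folding (NOT the full Kaldi map).
FOLD_39 = {
    "ao": "aa", "ax": "ah", "ax-h": "ah", "axr": "er", "hv": "hh", "ix": "ih",
    "el": "l", "em": "m", "en": "n", "nx": "n", "eng": "ng", "zh": "sh", "ux": "uw",
    "pcl": "sil", "tcl": "sil", "kcl": "sil", "bcl": "sil", "dcl": "sil",
    "gcl": "sil", "h#": "sil", "pau": "sil", "epi": "sil",
}

def phone_label(phone):
    """Fold a TIMIT phone into the 39-phone set; return None for silence,
    since silence is excluded from the 38 phone labels."""
    folded = FOLD_39.get(phone, phone)
    return None if folded == "sil" else folded

def select_speakers(speaker_ids, n_per_gender=20):
    """Pick n female and n male speakers by the gender letter of the TIMIT ID.
    Hypothetical rule: first n of each gender in sorted order."""
    female = sorted(s for s in speaker_ids if s.startswith("F"))[:n_per_gender]
    male = sorted(s for s in speaker_ids if s.startswith("M"))[:n_per_gender]
    if len(female) < n_per_gender or len(male) < n_per_gender:
        raise ValueError("not enough speakers of each gender")
    return female + male
```

With 20 speakers per gender, `select_speakers` returns the 40-speaker list described above.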

All speech utterances are first cut into phone segments according to the TIMIT phone transcriptions via forced alignment. Every segment is then set to 200 ms: longer segments are trimmed to 200 ms, and shorter ones are extended to 200 ms in both directions. Each segment is labeled with its phone and speaker classes. Each segment is then converted into a 20 × 200 time-frequency spectrogram by FFT, with a 25 ms window and a 10 ms window shift. The spectrograms are flattened into vectors and used as the input features of the deep generative models.
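The segmentation and feature steps above can be sketched with NumPy as follows. This is a sketch under several assumptions not stated on the page: 16 kHz audio (the TIMIT sampling rate), a 200 ms window centred on the segment midpoint (which both trims long segments and extends short ones), zero-padding at utterance edges, a Hamming window, log magnitude, and zero-padding the end so exactly 20 frames fit (a bare 200 ms segment gives only 18 full 25 ms frames at a 10 ms shift).

```python
import numpy as np

SR = 16000               # TIMIT audio is sampled at 16 kHz
SEG = int(0.200 * SR)    # 200 ms -> 3200 samples
WIN = int(0.025 * SR)    # 25 ms window -> 400 samples
HOP = int(0.010 * SR)    # 10 ms shift -> 160 samples
N_FRAMES, N_BINS = 20, 200

def fixed_length_segment(wave, start, end):
    """Cut a 200 ms window centred on the aligned segment [start, end) (samples).
    Longer segments are trimmed; shorter ones are extended in both directions.
    Assumption: centring on the midpoint, zero-padding beyond the utterance."""
    lo = (start + end) // 2 - SEG // 2
    out = np.zeros(SEG, dtype=np.float32)
    src_lo, src_hi = max(lo, 0), min(lo + SEG, len(wave))
    out[src_lo - lo:src_hi - lo] = wave[src_lo:src_hi]
    return out

def spectrogram_vector(seg):
    """20 x 200 log-magnitude spectrogram flattened to a 4000-dim vector.
    Assumptions: Hamming window, log magnitude, end zero-padding to 20 frames."""
    need = (N_FRAMES - 1) * HOP + WIN                 # 3440 samples for 20 frames
    x = np.pad(seg, (0, max(0, need - len(seg))))
    frames = np.stack([x[i * HOP:i * HOP + WIN] for i in range(N_FRAMES)])
    frames = frames * np.hamming(WIN)                 # taper each frame
    mag = np.abs(np.fft.rfft(frames, axis=1))[:, :N_BINS]  # 201 bins, drop Nyquist
    return np.log(mag + 1e-8).reshape(-1)
```

The exact framing convention (padding, centring, number of frequency bins) is a design choice here; the original pipeline may differ.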

Encoding

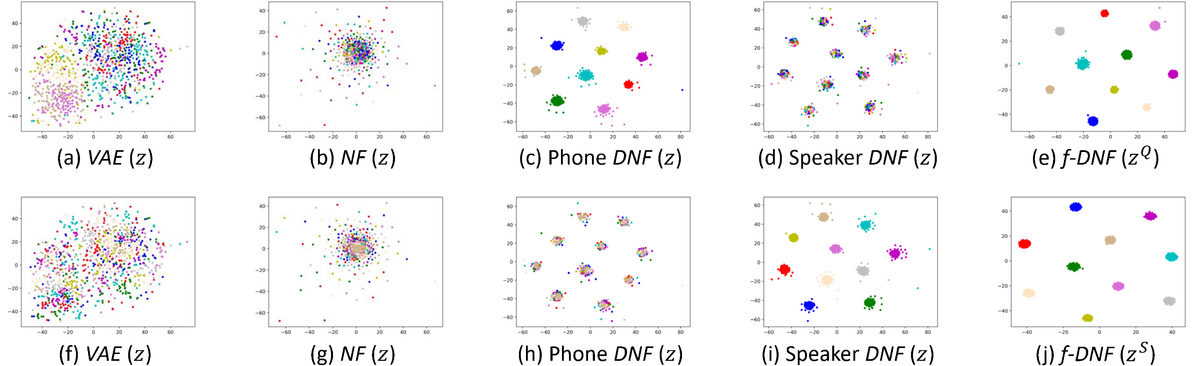

VAE and NF lose almost all of the class structure;

DNF retains the class structure of the information factor whose class labels are used in model training;

Factorial DNF can retain the class structure corresponding to all the information factors.

- The latent codes generated by the various models, visualized with t-SNE, are shown in the figure.

In the first row (a) to (e), each color represents a phone; in the second row (f) to (j), each color represents a speaker.

‘Phone DNF’ denotes DNF trained with phone labels; ‘Speaker DNF’ denotes DNF trained with speaker labels.

Factor manipulation

DNF manipulates factors more effectively than VAE and NF. However, DNF-based manipulation tends to cause larger distortion on the other factors. Factorial DNF performs similarly to, or even better than, DNF in factor manipulation, while causing very little distortion on the other factors.

Manipulation by the mean-shift approach:

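$$x' = f\left(f^{-1}(x) + \mu_{A,c_2} - \mu_{A,c_1}\right)$$

where $f$ maps latent codes to speech features, so $f^{-1}(x)$ is the latent code of $x$, and $\mu_{A,c}$ is the mean latent code of class $c$ of factor $A$ (phone or speaker). Adding $\mu_{A,c_2} - \mu_{A,c_1}$ moves the code from the source class $c_1$ to the target class $c_2$ before it is mapped back to the feature space.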
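A minimal sketch of the mean-shift manipulation, assuming a trained flow that exposes the map $f$ (latent to feature) and its inverse. `AffineFlow` is a hypothetical stand-in (a single invertible affine map) so the example runs; it is not the factorial DNF architecture, which this page does not describe. Estimating $\mu_{A,c}$ as the average latent code of training samples labeled $c$ for factor $A$ is also an assumption.

```python
import numpy as np

class AffineFlow:
    """Hypothetical stand-in for a trained flow f: latent z -> feature x.
    A real flow stacks many invertible layers; one affine map is used here."""
    def __init__(self, dim, seed=0):
        rng = np.random.default_rng(seed)
        # Adding dim * I keeps the random matrix well conditioned and invertible.
        self.W = rng.normal(size=(dim, dim)) + dim * np.eye(dim)
        self.b = rng.normal(size=dim)

    def forward(self, z):
        """f(z): latent codes (n, dim) -> features (n, dim)."""
        return z @ self.W.T + self.b

    def inverse(self, x):
        """f^{-1}(x): features (n, dim) -> latent codes (n, dim)."""
        return np.linalg.solve(self.W, (x - self.b).T).T

def class_means(flow, X, labels):
    """mu_{A,c}: mean latent code of the samples whose factor-A label is c."""
    Z = flow.inverse(X)
    return {c: Z[labels == c].mean(axis=0) for c in np.unique(labels)}

def mean_shift(flow, x, mu, c1, c2):
    """x' = f(f^{-1}(x) + mu_{A,c2} - mu_{A,c1})."""
    return flow.forward(flow.inverse(x) + mu[c2] - mu[c1])

# Usage on toy data: two classes of one factor, separated in latent space.
flow = AffineFlow(dim=4)
rng = np.random.default_rng(1)
labels = np.repeat([0, 1], 50)
Z = rng.normal(size=(100, 4)) + np.where(labels[:, None] == 0, -2.0, 2.0)
X = flow.forward(Z)
mu = class_means(flow, X, labels)
X_shifted = mean_shift(flow, X[labels == 0], mu, 0, 1)  # class 0 -> class 1
```

After the shift, the latent codes of the class-0 samples are centred on $\mu_{A,1}$; the shift is a single vector added to every code, so any latent directions where the two class means agree are left untouched.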

- MLP posteriors on the target class, and on the original class of the other factor, before and after phone/speaker manipulation are listed below.

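‘f-DNF’ denotes factorial DNF. δ(·) = p(·|x') − p(·|x) is the change in posterior caused by the manipulation. q2 and s2 are the target phone and speaker; q and s are the original phone and speaker of x, whose posteriors should ideally remain unchanged.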

Phone manipulation

| Model | p(q2\|x) | p(q2\|x') | δ(q2) | p(s\|x) | p(s\|x') | δ(s) |
|---|---|---|---|---|---|---|
| VAE | 0.013 | 0.312 | 0.299 | 0.612 | 0.454 | -0.158 |
| NF | 0.013 | 0.410 | 0.397 | 0.612 | 0.489 | -0.123 |
| DNF | 0.013 | 0.619 | 0.606 | 0.612 | 0.335 | -0.277 |
| f-DNF | 0.013 | 0.636 | 0.623 | 0.612 | 0.536 | -0.076 |

Speaker manipulation

| Model | p(s2\|x) | p(s2\|x') | δ(s2) | p(q\|x) | p(q\|x') | δ(q) |
|---|---|---|---|---|---|---|
| VAE | 0.010 | 0.303 | 0.293 | 0.520 | 0.509 | -0.011 |
| NF | 0.010 | 0.435 | 0.425 | 0.520 | 0.484 | -0.036 |
| DNF | 0.010 | 0.700 | 0.690 | 0.520 | 0.349 | -0.171 |
| f-DNF | 0.010 | 0.710 | 0.700 | 0.520 | 0.503 | -0.017 |
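The δ columns are plain differences, δ(·) = p(·|x') − p(·|x). As a quick arithmetic check, the sketch below recomputes them from the posteriors in the two tables; the dictionaries simply copy the published numbers, and nothing else is assumed.

```python
# Posteriors copied from the tables:
# (target before, target after, other factor before, other factor after)
PHONE_MANIP = {
    "VAE":   (0.013, 0.312, 0.612, 0.454),
    "NF":    (0.013, 0.410, 0.612, 0.489),
    "DNF":   (0.013, 0.619, 0.612, 0.335),
    "f-DNF": (0.013, 0.636, 0.612, 0.536),
}
SPEAKER_MANIP = {
    "VAE":   (0.010, 0.303, 0.520, 0.509),
    "NF":    (0.010, 0.435, 0.520, 0.484),
    "DNF":   (0.010, 0.700, 0.520, 0.349),
    "f-DNF": (0.010, 0.710, 0.520, 0.503),
}

def deltas(table):
    """Return {model: (delta_target, delta_other)}, delta = p(.|x') - p(.|x)."""
    return {m: (round(ta - tb, 3), round(oa - ob, 3))
            for m, (tb, ta, ob, oa) in table.items()}

for name, table in [("phone", PHONE_MANIP), ("speaker", SPEAKER_MANIP)]:
    for model, (d_t, d_o) in deltas(table).items():
        print(f"{name:8s} {model:6s} delta_target={d_t:+.3f} delta_other={d_o:+.3f}")
```

The recomputed values match the δ columns. f-DNF has the largest target gain in both experiments and the smallest drop on the other factor for phone manipulation; for speaker manipulation, VAE changes the phone posterior slightly less (-0.011 vs -0.017) but with a much smaller target gain.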

Future Work

- Test factorial DNF on larger datasets.

- Establish general theories for deep generative factorization.