OLR Challenge 2017

From cslt Wiki

AP16 Oriental Language Recognition (AP16-OLR) Challenge

The AP16 OLR challenge is part of the special session "multilingual speech and language processing" at APSIPA 2016. The challenge is a language recognition task covering seven oriental languages.

Data

The challenge is based on the AP16-OL7 multilingual database, provided by SpeechOcean (www.speechocean.com) for this challenge. Its main features are:

- Mobile channel

- 7 languages in total

- 24 speakers (18 speakers for training/development, 6 speakers for test).

- 71 hours of speech signals in total

- Transcriptions and lexica are provided

- The data profile is here

- The Licence for the data is here

Evaluation tools

Participation rules

- Participants of both the special session and the AP16-OLR challenge can apply for AP16-OL7 by sending emails to the organizers (see below).

- An agreement for the use of AP16-OL7 must be signed and returned to the organizers before the data can be downloaded.

- Publications based on AP16-OL7 should cite the following paper:

Dong Wang, Lantian Li, Difei Tang, Qing Chen, AP16-OL7: a multilingual database for oriental languages and a language recognition baseline, submitted to APSIPA 2016.

- The detailed evaluation plan can be found in the above paper as well.

Challenge procedure

- June 6: AP16-OL7 is ready and registration requests are accepted.

- July 30 to Aug. 1: test data release. Participants can submit their results before Aug. 1, 12:00 PM Beijing time. This submission is regarded as the 'prior submission'.

- Aug. 10: participants can obtain their own results for the prior submission.

- Oct. 2, 12:00 PM Beijing time: 'full submission' deadline.

- Dec. 10, 12:00 PM: extended submission deadline.

- APSIPA 2016: a summary of both the prior and the full submissions is presented at the special session.

Registration procedure

If you are interested in participating in the challenge, or if you have any other questions, comments, or suggestions about the challenge, please email the organizers:

- Dr. Dong Wang (wangdong99@mails.tsinghua.edu.cn)

- Ms. Qing Chen (chenqing@speechocean.com)

AP16-OLR challenge results released

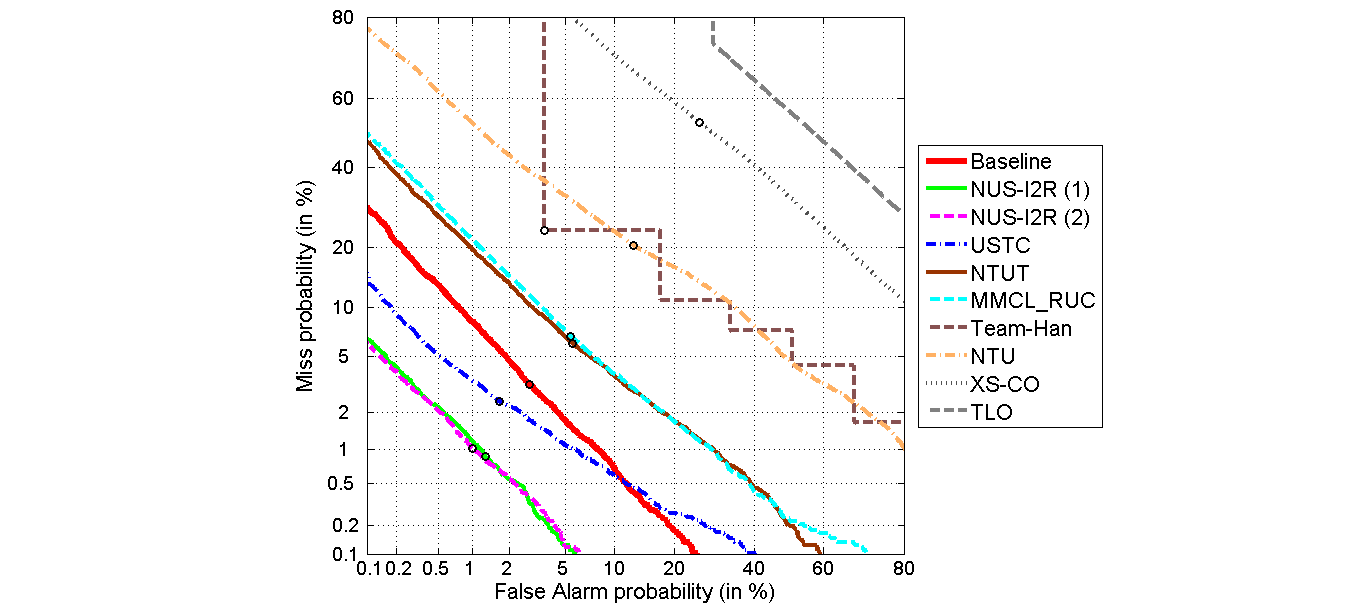

Cavg results

| Rank | Team | Cavg | EER% | minDCF | IDR% | Main researchers |
|---|---|---|---|---|---|---|
| 1 | NUS and I2R (1), Singapore | 1.13 | 1.09 | 0.0108 | 97.56 | Haizhou Li, Hanwu Sun, Kong Aik Lee, Nguyen Trung Hieu, Bin Ma |
| 2 | NUS and I2R (2), Singapore | 1.70 | 1.02 | 0.0101 | 97.60 | Haizhou Li, Hanwu Sun, Kong Aik Lee, Nguyen Trung Hieu, Bin Ma |
| 3 | USTC, China | 1.79 | 2.17 | 0.0205 | 96.94 | Wu Guo |
| 4 | NTUT, Taiwan, China | 5.86 | 5.88 | 0.0586 | 87.02 | Yuanfu Liao, Sing-Yue Wang |
| 5 | MMCL_RUC, China | 6.06 | 6.16 | 0.0610 | 86.21 | Haibing Cao, Qin Jin |
| 6 | PJ-Han, Germany | 14.00 | 17.34 | 0.1365 | 77.65 | Anonymous |
| 7 | NTU, Singapore | 14.72 | 17.44 | 0.1657 | 71.44 | Haihua Xu |
| 8 | XS-CO, China | 36.99 | 40.26 | 0.3924 | 31.91 | Anonymous |
| 9 | TLO, China | 50.00 | 53.34 | 0.4999 | 12.37 | Anonymous |

- Submissions marked in red outperform the CSLT baseline.

- USTC's is the only prior submission.

- NUS and I2R systems used 40 days
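The ranking above is by Cavg. As a rough illustration of what that metric measures, here is a minimal sketch of an average detection cost in the style of the NIST LRE definition, assuming P_target = 0.5 and one hard accept/reject decision per (utterance, target language) pair. The exact AP16-OLR definition is in the evaluation plan of the paper cited above; the function name `cavg` and its input format are hypothetical.

```python
from itertools import product


def cavg(decisions, p_target=0.5):
    """LRE-style average detection cost (sketch, assumed definition).

    decisions: list of (true_lang, target_lang, accepted) tuples, one per
    (trial utterance, target language) pair; accepted is the system's hard
    decision that the utterance belongs to target_lang. Every language pair
    is assumed to have at least one trial.
    """
    langs = sorted({t for t, _, _ in decisions} | {g for _, g, _ in decisions})
    n = len(langs)
    # Non-target prior is split evenly over the other N-1 languages.
    p_nontarget = (1.0 - p_target) / (n - 1)

    # Count trials and acceptances for each (true, target) language pair.
    total = {pair: 0 for pair in product(langs, langs)}
    accepted = {pair: 0 for pair in product(langs, langs)}
    for true_lang, target_lang, acc in decisions:
        total[(true_lang, target_lang)] += 1
        accepted[(true_lang, target_lang)] += int(acc)

    cost = 0.0
    for lt in langs:
        # Miss rate: utterances truly in lt that were rejected for lt.
        p_miss = 1.0 - accepted[(lt, lt)] / total[(lt, lt)]
        c = p_target * p_miss
        for ln in langs:
            if ln != lt:
                # False-alarm rate: utterances in ln wrongly accepted as lt.
                p_fa = accepted[(ln, lt)] / total[(ln, lt)]
                c += p_nontarget * p_fa
        cost += c
    # Average the per-target-language cost over all N languages.
    return cost / n


langs = ["a", "b", "c"]
perfect = [(t, g, t == g) for t in langs for g in langs]
reject_all = [(t, g, False) for t in langs for g in langs]
print(cavg(perfect), cavg(reject_all))
```

With three toy languages, a perfect system scores 0.0, while a system that rejects every trial scores 0.5 (all misses). Lower Cavg is better, which matches the ordering of the table.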

DET curves

More details

- More details are in the slides presented at the AP16 special session. Click here