“Speaker Recognition on Trivial events”: difference between revisions


Revision as of 03:44, 2 November 2017 (Thursday)


Project name

Speaker Recognition on Trivial events

Project members

Dong Wang, Miao Zhang, Xiaofei Kang, Lantian Li, Zhiyuan Tang

Introduction

Trivial events such as coughs, laughs and sniffs are ubiquitous in human-to-human conversations. Compared to regular speech, these events are usually short and unclear, so they are generally regarded as not speaker discriminative and are largely ignored by present speaker recognition research. However, trivial events are highly valuable in some particular circumstances such as forensic examination: they are less subject to intentional change, so they can be used to discover the genuine speaker from disguised speech. In this project, we collect a trivial event speech database and report speaker recognition results on the database, by both human listeners and machines. We want to find out: (1) which type of trivial event conveys more speaker information; (2) who, human or machine, is more apt to identify speakers from these trivial events.

Speaker feature learning

The discovery that speaker traits are a short-time property is a key step towards speech signal factorization, because the speaker trait is one of the two main factors of the speech signal. The other is linguistic content, which has long been known to consist of short-time patterns.

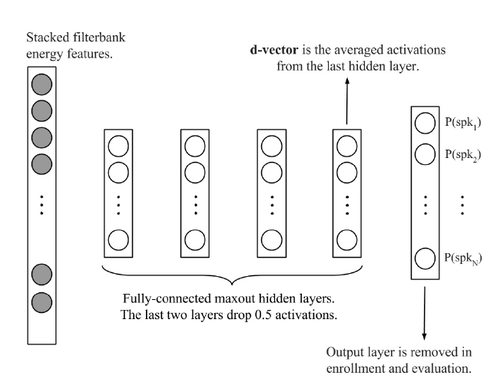

The key idea of speaker feature learning is to train a deep neural network (DNN) to discriminate the training speakers from short-time frames, an approach that dates back to 2014 by Ehsan et al.[2]. As shown below, the output layer of the DNN corresponds to the training speakers, and the frame-level speaker features are read from the last hidden layer. The basic assumption is that if the output of the last hidden layer can serve as the input feature of the output layer (a softmax regression classifier), these features should be speaker discriminative.

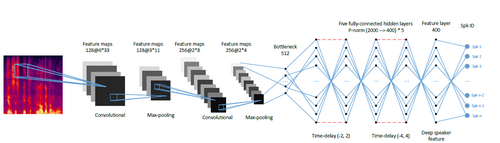
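The feature read-out described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the layer sizes, the ReLU activation and the randomly initialised weights (standing in for a trained network) are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 40-dim acoustic frames, 64 hidden units, 5 training speakers.
FEAT_DIM, HIDDEN_DIM, NUM_SPEAKERS = 40, 64, 5

# Random weights stand in for a trained network (assumption for this sketch).
W1 = rng.normal(scale=0.1, size=(FEAT_DIM, HIDDEN_DIM))
W2 = rng.normal(scale=0.1, size=(HIDDEN_DIM, HIDDEN_DIM))
W_out = rng.normal(scale=0.1, size=(HIDDEN_DIM, NUM_SPEAKERS))


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def forward(frames):
    """Return (frame-level speaker features, posteriors over training speakers)."""
    h1 = relu(frames @ W1)
    h2 = relu(h1 @ W2)                # last hidden layer: the speaker feature
    posteriors = softmax(h2 @ W_out)  # softmax regression over the training speakers
    return h2, posteriors


frames = rng.normal(size=(100, FEAT_DIM))  # 100 frames of one toy utterance
features, posteriors = forward(frames)
print(features.shape, posteriors.shape)    # (100, 64) (100, 5)
```

At test time only `features` would be kept; the output layer is needed only while training on the speaker labels.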

However, the vanilla structure of Ehsan et al. performs rather poorly compared to its i-vector counterpart. One reason is the simple back-end, which merely averages the frame-level features to derive utterance-level representations (called d-vectors) before scoring; another is that the vanilla DNN structure takes little account of context and pattern learning. We therefore proposed a CT-DNN model that can learn stronger speaker features. The structure is shown below[1]:

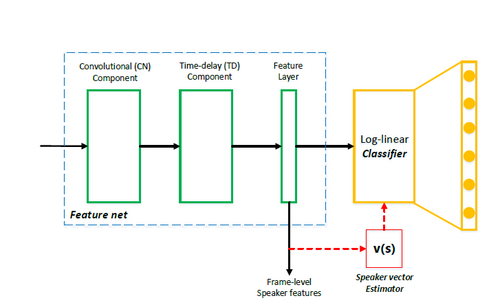
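For reference, the simple averaging back-end mentioned above can be sketched as follows. The sketch assumes cosine similarity as the scoring function and uses synthetic frame features; the function names `d_vector` and `cosine_score` are hypothetical and not taken from the project.

```python
import numpy as np


def d_vector(frame_features):
    """Average frame-level features into one utterance-level vector."""
    return np.asarray(frame_features).mean(axis=0)


def cosine_score(a, b):
    """Cosine similarity between two utterance-level vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


rng = np.random.default_rng(1)

# Synthetic data (assumption): each speaker has a mean feature plus frame noise.
spk_a_mean = rng.normal(size=64)
spk_b_mean = rng.normal(size=64)
enroll = spk_a_mean + 0.5 * rng.normal(size=(200, 64))    # enrollment frames, speaker A
test_same = spk_a_mean + 0.5 * rng.normal(size=(50, 64))  # test frames, speaker A
test_diff = spk_b_mean + 0.5 * rng.normal(size=(50, 64))  # test frames, speaker B

same = cosine_score(d_vector(enroll), d_vector(test_same))
diff = cosine_score(d_vector(enroll), d_vector(test_diff))
print(same > diff)  # the same-speaker trial should score higher
```

Averaging treats every frame equally, which is one reason short and noisy segments such as trivial events are hard for this back-end.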

Recently, we found that 'all-info' training is effective for learning features. Looking back at DNN and CT-DNN, the features read from the last hidden layer are discriminative but not 'all discriminative', because some discriminative information can also be implemented in the last affine layer. A better strategy is to let the feature generation net (feature net) learn all of the discriminative information. To achieve this, we discard the parametric classifier (the last affine layer) and use the simple cosine distance to conduct the classification. An iterative training scheme can implement this idea: after each epoch, the speaker features are averaged to derive speaker vectors, and these speaker vectors then take the place of the last affine layer's parameters. Training then proceeds as usual. The new structure is described in [4].
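The iterative scheme can be illustrated with a toy NumPy sketch. It is only a sketch under stated assumptions, not the method of [4]: the feature net is a single linear layer, each epoch is one full-batch gradient step, the softmax over scaled cosine similarities and the values of `SCALE`, `LR` and `EPOCHS` are hypothetical choices, and the data are synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)
NUM_SPEAKERS, FRAMES_PER_SPK, IN_DIM, FEAT_DIM = 4, 50, 20, 16
SCALE, LR, EPOCHS = 10.0, 0.5, 20  # hypothetical hyperparameters

# Synthetic frames (assumption): each speaker has its own mean in the input space.
means = rng.normal(size=(NUM_SPEAKERS, IN_DIM))
labels = np.repeat(np.arange(NUM_SPEAKERS), FRAMES_PER_SPK)
X = means[labels] + 0.5 * rng.normal(size=(labels.size, IN_DIM))

# The feature net: a single linear layer, the only trainable parameters here.
W = rng.normal(scale=0.1, size=(IN_DIM, FEAT_DIM))


def unit(v):
    """Scale each row to unit length."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def speaker_vectors(W):
    """Average the length-normalised features of each training speaker."""
    U = unit(X @ W)
    return unit(np.stack([U[labels == s].mean(axis=0) for s in range(NUM_SPEAKERS)]))


def accuracy(W, V):
    """Frame accuracy when classifying by highest cosine similarity."""
    return float((np.argmax(unit(X @ W) @ V.T, axis=1) == labels).mean())


V = speaker_vectors(W)
for epoch in range(EPOCHS):
    F = X @ W
    norms = np.linalg.norm(F, axis=1, keepdims=True)
    U = F / norms
    logits = SCALE * (U @ V.T)                 # scaled cosine similarity to each speaker vector
    logits -= logits.max(axis=1, keepdims=True)
    P = np.exp(logits)
    P /= P.sum(axis=1, keepdims=True)
    P[np.arange(labels.size), labels] -= 1.0   # cross-entropy gradient w.r.t. the logits
    dU = SCALE * (P @ V)
    dF = (dU - U * (dU * U).sum(axis=1, keepdims=True)) / norms  # back through normalisation
    W -= LR * (X.T @ dF) / labels.size         # only the feature net is updated
    V = speaker_vectors(W)                     # re-estimate speaker vectors after the epoch

print(accuracy(W, V))
```

Because `V` is recomputed from the features rather than trained, all discriminative capacity has to reside in `W`, which is the point of the scheme.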

Database design

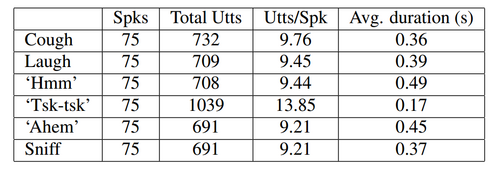

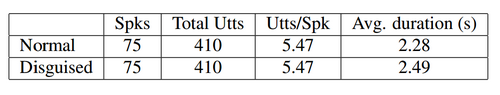

To collect the data, we designed a mobile application and distributed it to people who agreed to participate. The application asked the participants to utter 6 types of trivial events in a random order, and each event occurred 10 times randomly. The random order ensures a reasonable variance of the recordings for each event. The sampling rate of the recordings was set to 16 kHz and the precision of the samples was 16 bits.
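The prompting scheme can be sketched as below. This is an illustration rather than the actual application code: the function name `make_prompt_schedule` and the seed are hypothetical, the event labels are those of CSLT-TRIVIAL-I, and the data-rate figure assumes single-channel recordings.

```python
import random
from collections import Counter

EVENTS = ["cough", "laugh", "hmm", "tsk-tsk", "ahem", "sniff"]
REPEATS = 10  # each event is prompted 10 times


def make_prompt_schedule(seed=None):
    """Return all prompts for one participant in random order."""
    rng = random.Random(seed)
    schedule = [event for event in EVENTS for _ in range(REPEATS)]
    rng.shuffle(schedule)
    return schedule


schedule = make_prompt_schedule(seed=42)
print(len(schedule))               # 60 prompts per participant
print(Counter(schedule)["cough"])  # 10

# Uncompressed data rate at 16 kHz with 16-bit samples, assuming mono audio.
SAMPLE_RATE_HZ = 16_000
BYTES_PER_SAMPLE = 2
print(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)  # 32000 bytes per second
```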

We first designed CSLT-TRIVIAL-I, which involves 6 trivial events of speech: cough, laugh, 'hmm', 'tsk-tsk', 'ahem' and sniff.

We also designed CSLT-DISGUISE-I, a speech database of paired normal and disguised speech.

Data downloading

CSLT-TRIVIAL-I and CSLT-DISGUISE-I are free for research use, but a license is required. Contact Dr. Dong Wang (wangdong99@mails.tsinghua.edu.cn).

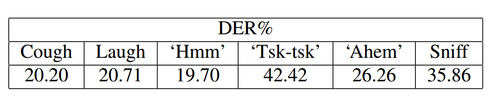

Human performance

Human listeners did not perform well on CSLT-TRIVIAL-I, nor on CSLT-DISGUISE-I, where the detection error rate (DER) was 47.47%.

Machine performance

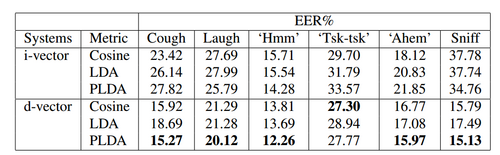

Machine performance on CSLT-TRIVIAL-I.

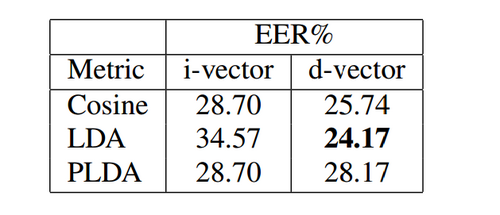

Machine performance on CSLT-DISGUISE-I.

Research directions

- Speech perception.

- Forensic examination.

References

[1] Lantian Li, Yixiang Chen, Ying Shi, Zhiyuan Tang, and Dong Wang, "Deep speaker feature learning for text-independent speaker verification", Interspeech 2017.

[2] Lantian Li, Dong Wang, Yixiang Chen, Ying Shi, and Zhiyuan Tang, http://wangd.cslt.org/public/pdf/spkfact.pdf

[3] Dong Wang, Lantian Li, Ying Shi, Yixiang Chen, and Zhiyuan Tang, "Deep Factorization for Speech Signal", https://arxiv.org/abs/1706.01777