Differences between revisions of "News-2017-12-15"

Latest revision as of 07:48, 20 December 2017 (Wednesday)

The Oriental Language Recognition (OLR) Challenge 2017, co-organized by CSLT@Tsinghua University and Speechocean, was completed with great success. The results were published at APSIPA ASC, Dec 12-15, 2017, Kuala Lumpur, Malaysia (News file / press release).

Overview

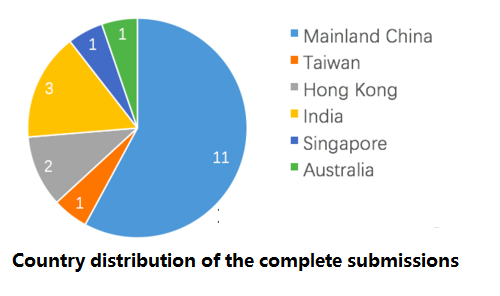

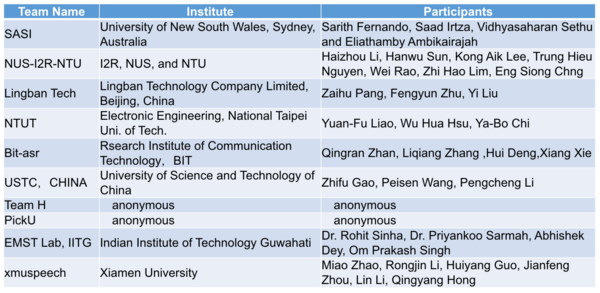

A total of 31 teams registered for this challenge. By the extended submission deadline (Dec. 12), 19 teams had submitted complete results, 6 teams had submitted partial results or responded actively, and 6 teams did not respond after downloading the data. The 19 complete submissions have been ranked in two lists: one by overall performance (considering performance on all conditions), and the other by performance on the short-utterance condition.

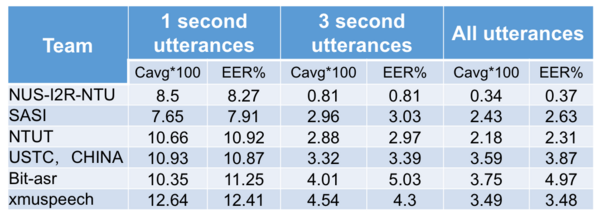

Ranking List on Overall Performance

For the overall performance ranking list, we present the results and details of the top 6 teams whose systems outperformed the baseline we provided.

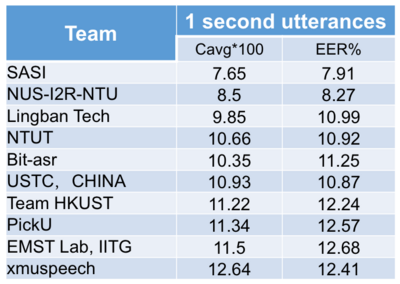

Ranking List on Short-Utterance Condition

For the short-utterance ranking list, we present the results and details of the top 10 teams whose systems outperformed the baseline we provided.

For more details on the OLR challenge, please see its homepage.